A number of contact tracing mobile apps have already been proposed [1, 2, 3], and the NHS in the UK is preparing to launch its own version [4]. More recently, an unprecedented coalition between Apple and Google (who together enjoy more than 98% of the mobile OS market share) proposed changes to their mobile operating systems to support contact tracing applications while claiming to respect users’ privacy [5, 20]. The overall consensus is to avoid any direct use of location information and instead rely on proximity detection over wireless protocols such as Bluetooth to determine contact. In this article we will not analyze the choice of wireless technology; that is a separate issue in its own right. Instead, we analyze some of the security and trust implications of the proposed smartphone-assisted tracing solutions. We conclude with a proposal for three necessary security measures that mobile operating system providers should take before deploying tracing capabilities on users’ devices: user control, privileged operation, and isolation of execution.

Whose responsibility is it? Coverage, and Fast!

There are multiple potential providers of a smartphone-assisted contact-tracing solution. These include the mobile operating system providers (primarily Google and Apple, but also Samsung, HTC and others who deploy their own versions of Android) and the telecommunication providers (such as AT&T, T-Mobile, Verizon, etc.). It could also be national governments, health institutions or any third-party developer who believes they have a ground-breaking solution to contact tracing using mobile technology.

An obvious approach, which the government of Singapore embraced, is for the government to step up and offer a solution. The Singaporean government quickly developed (or endorsed) a contact-tracing app based on existing capabilities of smartphone operating systems. It uses Bluetooth and focuses primarily on utility rather than user privacy. A better solution would have been a provably privacy-preserving app that uses existing capabilities of smartphone operating systems to determine whether its user came into contact with a confirmed COVID-19 case (researchers based in Europe are already working toward this [6]). The big challenge with such approaches is getting universal coverage, and fast. For contact tracing to be useful, the majority (if not all) of the population should use it. Assuming strict checks at country borders, the population in this case would be that of a country or a travel bloc (like the EU or the Schengen Area). In other words, how do you convince people to download and install such an app? Moreover, what stops another 10, 20, or even 100 application developers from offering their own solutions? We could potentially address this through nationally coordinated efforts (see the NHS app) with the support of the government and the media. In addition, this coverage must be achieved fast. The economic implications of lockdown are of paramount importance to all nations and to businesses of all sizes. Getting coverage fast would allow lockdown measures to be relaxed and would minimize the time before returning to normality.
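A rough back-of-the-envelope calculation shows why coverage matters so much. A contact can only be recorded if both people involved run the app. Assume, as a simplification of our own that no deployed design makes, that app users are spread independently of who meets whom. Then an adoption rate $p$ means that only a fraction $p^2$ of contacts can be detected:

$$
P(\text{contact detected}) = p \cdot p = p^2, \qquad p = 0.6 \;\Rightarrow\; p^2 = 0.36
$$

So even with 60% of the population on board, nearly two thirds of contacts would go unrecorded.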

Telecommunication providers, on the other hand, already have their subscribers, so they could in a way “force” contact tracing by leveraging their vantage point on users’ devices. This would achieve good and fast coverage: telecom operators have preinstalled apps on their subscribers’ devices, and these preinstalled apps have “special” privileges on the device. However, this solution would create fragmentation across providers. For it to be meaningful, the providers would need some sort of agreement to exchange data, with all the ethical, privacy and trust issues this would entail.

Finally, we have the mobile operating system providers. Google is responsible for the Android Open Source Project (where the Android operating system comes from), which enjoys more than 85% of the mobile operating system market share. Combine that with Apple, which owns 13% of the global mobile OS market share, and we nearly achieve global coverage of smartphone users. The recent and unprecedented agreement between Apple and Google [5] ensures that contact tracing can be enabled on almost all smartphones instantaneously (it would merely need users to agree to a software update). Moreover, a solution offered by the mobile OS providers tackles another big issue, that of fragmentation. This problem arises when multiple designs from different parties coexist. It makes identifying and fixing security and privacy issues across solutions more challenging. At the same time, it increases the probability of an implementation fault that might lead to a vulnerability. Lastly, these providers already have access to fine-grained location information of users anyway; how and when they use it is a story for another time.

Who to trust? Less is More!

Any of the above is a plausible solution; what the right approach is, though, is debatable. Mobile OS providers could solve both the coverage and the fragmentation issues. However, two caveats apply:

(1) Any potential vulnerability in the implementation of the proposed design would instantly affect all device owners (a large part of the population).

(2) The solution should not be “forced” on users.

Regarding (1), Apple and Google seem to take on responsibility for the security of the implementation. This avoids the fragmentation issues that different third-party implementations would bring. It matters because, as we have repeatedly observed in the past [7, 8, 9], mobile apps tend either to take security for granted or to do a very bad job implementing it. Obviously, this responsibility should not be taken lightly: we have also found design and implementation issues at the operating system level that allowed a number of security and privacy issues to manifest [10, 11, 12]. We believe that the best way to prevent or quickly address any of these is transparency (or open design), which would allow input on and scrutiny of such designs and implementations. Design documents have been released, but an implementation does not always abide by its design. Thus, we call on both providers to immediately release the code of the relevant implementation (system services, networking protocol, etc.).

Regarding (2), Google and Apple delegate the liability to the application developers (NHS, Singapore Gov app, etc.). The OS undertakes the security of the implementation, but it will be the app developers’ responsibility to:

- initiate scanning;
- share the keys with the centralized server;
- download the (encrypted) keys of diagnosed individuals [13].

These third-party apps would also get the result of tracing, that is, they would know whether the device owner has been exposed to a confirmed infected individual. They would also know whether the device owner is infected themselves, since reporting will most likely happen through the app (albeit with the user’s implicit consent). Thus, offering some functionality at the OS level can safeguard the privacy of other people (at least this is the claim), but users are not necessarily protected from the app or its server. Again, approaches like the one in [6] would be needed to mitigate this.

Trust Levels: 3rd party apps, privileged apps and the operating system

Putting everything into perspective, there are different levels of trust involved, which we need to consider when evaluating a smartphone-assisted solution. A secure system design tries to minimize the trusted computing base (TCB), i.e. the set of system components that are trusted to perform an operation securely.

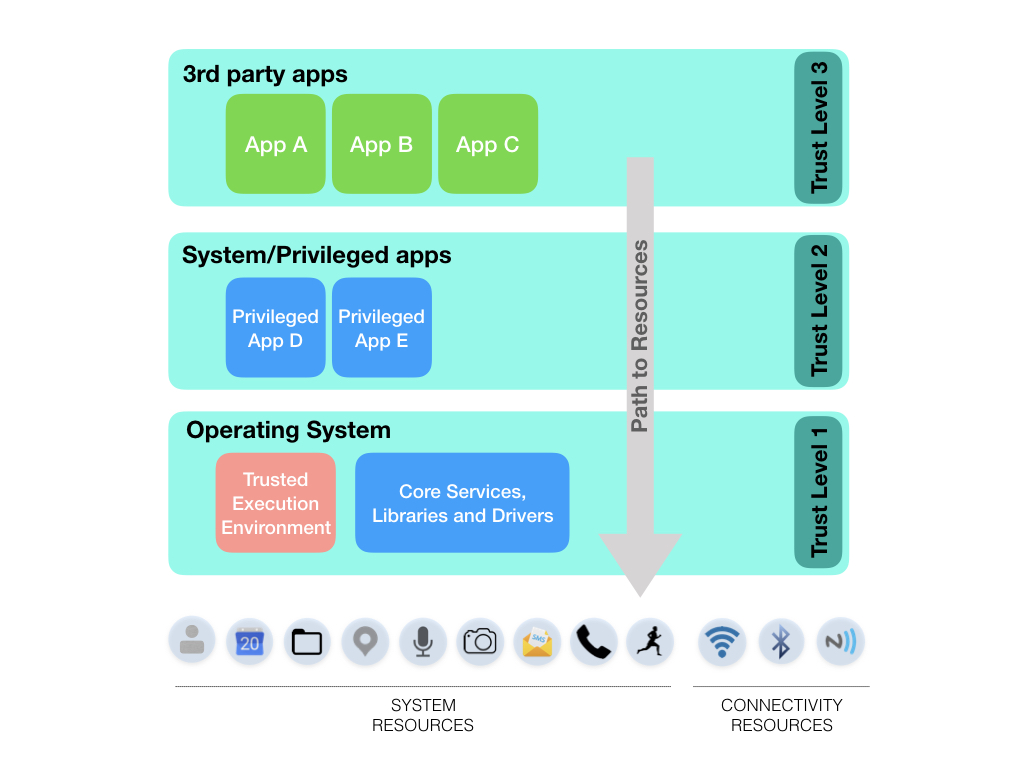

Figure 1 illustrates the different components of a smartphone system in this context. Trust levels form a hierarchy: the lowest part of the operating system enjoys the highest level of trust and encompasses all other trust levels. Let’s walk through this layer by layer.

At the top are the third-party applications, the ones we get from Google Play and the Apple App Store. Third-party apps are isolated from each other (although side channels that can break those barriers still exist) and from the rest of the operating system. As far as the operating system is concerned, however, they all have the same level of trust. Therefore, if we allow one third-party app to perform contact tracing, we are essentially allowing all third-party apps to do the same (they have the same privileges on the device; see Trust Level 3 in Figure 1). This can already happen if apps start using Bluetooth or location information on a smartphone directly.

When a third-party app accesses a resource (let’s say Bluetooth in this case), the execution path goes through the operating system. In particular, to use Bluetooth (i.e. to access what the Bluetooth receiver hardware reads), the app needs to request the Bluetooth permission from the operating system. However, on Android this permission has protection level “normal”, which means that it is granted automatically (without user consent) to any requesting app. Moreover, Bluetooth readings reach the app through the operating system, which also has access to the application’s memory, files and operations. To put it simply, whatever the app accesses, the operating system can access too.
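The grant decision described above can be summarized in a simplified Python model. It is a sketch, not Android’s actual package-manager logic. The enum, dictionary and function names are hypothetical, and only the three protection levels relevant to this discussion are modeled. It shows that a “normal” permission such as Bluetooth never consults the user.

```python
from enum import Enum


class ProtectionLevel(Enum):
    """Simplified subset of Android permission protection levels."""
    NORMAL = "normal"        # granted automatically, no user prompt
    DANGEROUS = "dangerous"  # granted only after explicit user consent
    SIGNATURE = "signature"  # granted only to apps signed with the declaring app's key


# As noted in the text, the Bluetooth permission is declared "normal".
PERMISSION_LEVELS = {
    "android.permission.BLUETOOTH": ProtectionLevel.NORMAL,
}


def is_granted(level: ProtectionLevel,
               user_consented: bool = False,
               same_signer: bool = False) -> bool:
    """Hypothetical grant decision for a requesting app."""
    if level is ProtectionLevel.NORMAL:
        return True                 # the user is never asked
    if level is ProtectionLevel.DANGEROUS:
        return user_consented       # depends on the user's answer
    if level is ProtectionLevel.SIGNATURE:
        return same_signer          # depends only on the signing key
    return False
```

In this model, `is_granted(PERMISSION_LEVELS["android.permission.BLUETOOTH"])` returns `True` for every app. This is why all apps that ask for Bluetooth end up at the same trust level.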

Choosing to trust the telecom providers to do this improves things, because it reduces the TCB. Why? In a mobile operating system such as Android, access to sensitive parts of the system is granted based on the cryptographic keys used to sign the requesting app:

- Third-party apps (the apps you would get from Google Play) are usually signed with their developers’ own self-signed keys.
- Telecommunication provider apps and other system partner apps (e.g. a keyboard app) are signed with the platform key and come preinstalled on your phone.

In other words, by trusting these partner apps we also trust the operating system, which uses the same platform key. We can, however, tell them apart from third-party apps, which use different keys. Unfortunately, trusting telecommunication provider apps or other privileged apps that hold the platform key comes with its own security threats. Essentially, Trust Levels 1 and 2 in Android are equal. Therefore, vulnerabilities in system partner apps can allow an adversary to elevate their privileges to the same level as the operating system [14].
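A toy model makes the collapse of Trust Levels 1 and 2 concrete. It assumes that Level 1 is the OS, Level 2 covers privileged (platform-signed) apps and Level 3 covers third-party apps. Signers are identified by the SHA-256 digest of their certificate, and the certificate bytes and app names are hypothetical placeholders. The decision depends only on the signing key, so a platform-signed partner app lands at the same level as the OS components.

```python
import hashlib
import hmac


def cert_digest(cert: bytes) -> bytes:
    """Identify a signer by the SHA-256 digest of its certificate."""
    return hashlib.sha256(cert).digest()


# Hypothetical byte strings standing in for real X.509 certificates.
PLATFORM_CERT = b"hypothetical platform certificate"
DEVELOPER_CERT = b"hypothetical self-signed developer certificate"
PLATFORM_DIGEST = cert_digest(PLATFORM_CERT)


def trust_level(signing_cert: bytes) -> int:
    """Map a signing certificate to a trust level (1 = highest)."""
    if hmac.compare_digest(cert_digest(signing_cert), PLATFORM_DIGEST):
        # OS components and platform-signed partner apps share this key,
        # so this check cannot tell them apart: Levels 1 and 2 collapse.
        return 1
    return 3  # every other app is treated as an ordinary third-party app


APPS = {
    "os_bluetooth_service": PLATFORM_CERT,  # part of the OS
    "carrier_app": PLATFORM_CERT,           # preinstalled partner app
    "store_app": DEVELOPER_CERT,            # installed from an app store
}

if __name__ == "__main__":
    for name, cert in APPS.items():
        print(name, trust_level(cert))
```

Under this model, an attacker who compromises `carrier_app` inherits whatever the platform key unlocks.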

Then we have the operating system. On Android devices, this comprises the Android middleware, the core system services and libraries, and the underlying Linux kernel. The Android operating system is developed by the Android Open Source Project (AOSP), but it is essentially controlled by Google: virtually anyone can contribute to its development, but Google engineers have the final say on which contributions become part of the operating system. Note that the version of Android released by Google is then modified by OEMs such as Samsung and HTC before being deployed on their devices. Some devices, such as the Pixel series, run a “pure” version of Android without OEM modifications.

The proposal by Google and Apple is for the OS to deploy a new version of the Bluetooth software stack that supports contact-tracing-specific device discovery. In this design, the actual Bluetooth identifiers are anonymized (replaced by rotating identifiers) before they are broadcast to other devices or made available to third-party apps. The operating system would be responsible for generating those identifiers, encrypting them, broadcasting them, storing them and delivering their encrypted form to third-party apps.

The OS would also perform the matching operation. A third-party app would be able to query the operating system with a set of encrypted identifiers known to belong to the devices of some individuals of interest (in this case, confirmed COVID-19 cases), without knowing who those individuals are. The operating system would then check whether it has seen those identifiers in the past and inform the querying app. With this, we trust the operating system to perform these sensitive operations and to keep device identifiers hidden from third-party apps. The apps receive only the end result: whether or not this device has been in proximity of a given identifier.
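To make the generate, broadcast and match cycle concrete, here is a minimal Python sketch loosely modeled on the v1.0 draft of the Apple/Google cryptography specification. It uses a per-device tracing key, daily keys derived with HKDF-SHA256, and 16-byte rolling identifiers derived with HMAC-SHA256 for each 10-minute interval. The byte encodings and function names are our assumptions, later revisions of the specification changed the derivation, and none of this is either vendor’s implementation. In this sketch, the app hands the OS the daily keys of diagnosed users. The OS re-derives their identifiers itself and returns only a yes/no answer.

```python
from __future__ import annotations

import hashlib
import hmac
import os
import struct
from typing import Iterable

HASH_LEN = 32            # SHA-256 output length in bytes
INTERVALS_PER_DAY = 144  # 24 hours split into 10-minute intervals


def hkdf_sha256(ikm: bytes, salt: bytes | None, info: bytes, length: int) -> bytes:
    """HKDF as in RFC 5869; a missing salt becomes HASH_LEN zero bytes."""
    prk = hmac.new(salt or b"\x00" * HASH_LEN, ikm, hashlib.sha256).digest()  # extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand: T(n) = HMAC(PRK, T(n-1) | info | n)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def new_tracing_key() -> bytes:
    """Per-device secret that, ideally, never leaves the device."""
    return os.urandom(32)


def daily_key(tracing_key: bytes, day_number: int) -> bytes:
    """16-byte daily key; day_number counts days since the Unix epoch (uint32 LE assumed)."""
    return hkdf_sha256(tracing_key, None, b"CT-DTK" + struct.pack("<I", day_number), 16)


def rolling_id(dtk: bytes, interval: int) -> bytes:
    """16-byte identifier broadcast over BLE during one 10-minute interval (0-143)."""
    return hmac.new(dtk, b"CT-RPI" + bytes([interval]), hashlib.sha256).digest()[:16]


def ids_for_day(dtk: bytes) -> set[bytes]:
    """All identifiers a device would have broadcast on the day of this key."""
    return {rolling_id(dtk, j) for j in range(INTERVALS_PER_DAY)}


def exposed(diagnosis_keys: Iterable[bytes], observed: set[bytes]) -> bool:
    """OS-side matching: re-derive each diagnosed user's identifiers and intersect
    them with the identifiers this device overheard. Only the boolean is returned."""
    return any(ids_for_day(dtk) & observed for dtk in diagnosis_keys)
```

For example, suppose Bob’s phone overheard `rolling_id(daily_key(alice_tk, day), 42)` and Alice later uploads `daily_key(alice_tk, day)` as a diagnosis key. Then `exposed` returns `True` on Bob’s phone, while the app sees nothing but that boolean.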

We could reduce the TCB even further by employing trusted hardware. This would ensure that the relevant cryptographic keys are never accessible, even to parts of the operating system itself. The keys would therefore remain secure even when the OS is compromised (e.g. by users who maliciously root their devices, by rootkit applications, or through vulnerable system apps). We could even employ remote attestation so that the server accepts requests only from devices whose integrity can be guaranteed (with some confidence). Unfortunately, not all smartphones have the hardware capabilities to support such solutions.
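The remote-attestation idea can be sketched as a challenge-response check on the server. This is a deliberately simplified model. Real schemes, such as hardware-backed key attestation, rely on asymmetric keys and certificate chains. Here, an HMAC under a per-device key, assumed to be provisioned inside trusted hardware, stands in for the signature. The function and class names, their parameters, and the notion of a “known-good state digest” are all hypothetical.

```python
import hashlib
import hmac
import secrets


def attest(device_key: bytes, nonce: bytes, state_digest: bytes) -> bytes:
    """Assumed to run inside trusted hardware: binds the device state to the nonce."""
    return hmac.new(device_key, nonce + state_digest, hashlib.sha256).digest()


class AttestationVerifier:
    """Server side: accept a request only with a fresh, valid attestation of a known-good state."""

    def __init__(self, device_keys: dict, known_good_states: set):
        self._device_keys = device_keys         # device_id -> provisioned key (symmetric stand-in)
        self._known_good = known_good_states    # digests of unmodified OS builds
        self._pending = set()                   # nonces issued but not yet used

    def challenge(self) -> bytes:
        nonce = secrets.token_bytes(16)
        self._pending.add(nonce)
        return nonce

    def verify(self, device_id: str, nonce: bytes, state_digest: bytes, tag: bytes) -> bool:
        if nonce not in self._pending:
            return False                        # unknown or replayed challenge
        self._pending.discard(nonce)            # each nonce is single-use
        key = self._device_keys.get(device_id)
        if key is None or state_digest not in self._known_good:
            return False                        # unknown device, or modified/rooted build
        return hmac.compare_digest(attest(key, nonce, state_digest), tag)
```

The single-use nonce stops a captured response from being replayed. The state check is what lets the server refuse requests from devices that report a modified build.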

Three Necessary Security Measures

What the above analysis shows is that by offering contact tracing at the OS level, we can achieve great coverage fast, reduce implementation fragmentation and significantly reduce the trusted computing base.

The principal issue with “allowing” mobile OS providers to support contact tracing is that once this functionality has been implemented, it would be difficult to remove and could potentially be overused after the pandemic. In particular, what guarantees do users have that this new capability will not be exploited after the pandemic to trace people’s interactions? The OS providers (or third-party developers at a higher level) could use it for advertising purposes (advertising is Google’s main revenue stream) [15], or share it with a government, intelligence or national security agency [16].

Below we propose three simple technical measures that mobile OS providers should take. Together, these measures follow a defense-in-depth approach. They aim to give users full control of the added functionality while protecting against unintended privacy leakage to untrusted applications. Our measures are complementary to the already announced specifications [13, 17].

1. Control to the User

Tracing capability should by-default be disabled and only enabled by the user.

Users should be in full control of this new contact detection capability. To enable this, we need to decouple the new BLE service (UUID 0xFD6F) from other Bluetooth services. Users should be able to turn this capability completely on and off through the device settings. This should obviously be opt-in rather than opt-out: the default status should be OFF.

Why? Otherwise, any application with the Bluetooth permission (granted automatically on Android) would in principle be able to leverage the new capability [7, 8]. We are against simply adding a new permission, which would only contribute to the permission bloat problem. Users are habituated to security warnings and do not always understand what a permission entails. They also rarely have the contextual cues needed to make a correct decision [18, 19].
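The difference between today’s behavior and this measure can be expressed as two access checks. This is a hypothetical model of a policy check inside the OS service that exposes the 0xFD6F BLE service, not an existing Android or iOS API. The class, field and function names are ours.

```python
from dataclasses import dataclass, field

BLUETOOTH = "android.permission.BLUETOOTH"
CONTACT_DETECTION_UUID = 0xFD6F  # 16-bit BLE service UUID mentioned in the text


@dataclass
class App:
    package: str
    permissions: set = field(default_factory=set)


@dataclass
class DeviceSettings:
    contact_detection_enabled: bool = False  # opt-in: OFF by default


def status_quo_allows(app: App) -> bool:
    """Without the measure: the auto-granted Bluetooth permission is enough."""
    return BLUETOOTH in app.permissions


def measure1_allows(app: App, settings: DeviceSettings) -> bool:
    """With the measure: a separate, user-controlled switch gates the service,
    so it stays unusable until the user turns it on in the device settings."""
    return settings.contact_detection_enabled and status_quo_allows(app)
```

Under this model, a game that holds the Bluetooth permission passes the first check but fails the second until the user explicitly enables contact detection.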

2. Privileged Operation

Tracing capability should only be available to a trusted app.

The new service should not be available to just any third-party app for any purpose. We are integrating into smartphones an operation that can enable inference of very sensitive personal data (social interactions, potentially exact location through those interactions and metadata, etc.), so this operation should require special privileges. We could realize this by finally splitting Trust Levels 1 and 2 (see Figure 1). How? Apple, Google and other OEMs can provide trusted organizations (e.g. the NHS in the UK) with unique cryptographic keys for signing their apps. The operating system could use these keys to ensure that only those apps can use the new BLE service. This way, under no circumstances can other third-party apps use this functionality. That includes curious apps, phishing apps that mimic the legitimate one, and apps advertised as healthcare or government apps. For the tech-savvy: on Android, this security policy can be enforced through SELinux.
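The core decision behind this measure might look like the following sketch. It compares the SHA-256 digest of a caller’s signing certificate against an allowlist of keys issued to trusted organizations. The certificate bytes are hypothetical placeholders. On a real Android device, the policy would also be enforced by SELinux rather than by an application-level check alone. Unlike in the status quo, a platform-signed app is not automatically trusted here.

```python
import hashlib
import hmac


def cert_digest(cert: bytes) -> bytes:
    """Identify a signer by the SHA-256 digest of its certificate."""
    return hashlib.sha256(cert).digest()


# Hypothetical byte strings; real ones would be X.509 certificates issued by the OS vendor.
HEALTH_AUTHORITY_CERT = b"hypothetical certificate issued to a health authority"
PLATFORM_CERT = b"hypothetical platform certificate"
LOOKALIKE_CERT = b"hypothetical certificate of a phishing look-alike app"

# Only keys issued specifically for contact tracing are allowlisted. The platform
# key is deliberately absent, so platform-signed partner apps gain no access.
TRACING_SIGNERS = frozenset({cert_digest(HEALTH_AUTHORITY_CERT)})


def may_use_tracing_service(caller_cert: bytes) -> bool:
    """True only if the caller was signed with an allowlisted tracing key."""
    digest = cert_digest(caller_cert)
    return any(hmac.compare_digest(digest, allowed) for allowed in TRACING_SIGNERS)
```

A look-alike app can copy the legitimate app’s name and icon, but not its signing key, so it fails this check.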

3. Isolation of Execution

Applications using tracing should be executed in an isolated part of the operating system.

A malicious app could leverage side channels (by monitoring CPU, power or memory usage) to infer the operations performed by the trusted app. To tackle this, trusted apps that use the new service should be executed in their own isolated environment. We could leverage compartmentalization techniques such as Android for Work or Samsung KNOX to enable this.

Moreover, the new service (i.e. the Contact Detection Service [13]) should be isolated from other components of the OS. SELinux policies and enforcement can help us realize this on Android. Lastly, on devices that support a Trusted Execution Environment (TEE), we could further ensure that key generation, key storage and the core security operations are performed inside the TEE.
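The interface that a TEE-backed key store would expose can be sketched as follows. Python cannot provide real isolation; name mangling is not a security boundary. This sketch therefore only models the boundary:

- the tracing key is generated and used inside the object, and no method ever returns it;
- a daily key leaves the store only on explicit user confirmation, tying back to the first measure.

The class and method names are hypothetical, and the derivation labels are illustrative placeholders rather than any specification’s.

```python
import hashlib
import hmac
import os


class IsolatedKeyStore:
    """Models TEE-held state: callers receive derived values, never the master key."""

    def __init__(self) -> None:
        self.__tracing_key = os.urandom(32)  # generated "inside" the boundary

    def _daily_key(self, day: int) -> bytes:
        """Internal derivation; conceptually never callable from outside the TEE."""
        msg = b"daily" + day.to_bytes(4, "little")
        return hmac.new(self.__tracing_key, msg, hashlib.sha256).digest()[:16]

    def broadcast_id(self, day: int, interval: int) -> bytes:
        """Opaque 16-byte identifier handed to the Bluetooth stack for broadcasting."""
        dk = self._daily_key(day)
        msg = b"id" + interval.to_bytes(1, "little")
        return hmac.new(dk, msg, hashlib.sha256).digest()[:16]

    def export_daily_key(self, day: int, user_confirmed: bool) -> bytes:
        """Released for upload only after the user confirms a positive diagnosis."""
        if not user_confirmed:
            raise PermissionError("user confirmation required to release a daily key")
        return self._daily_key(day)
```

The point of the shape is that the Contact Detection Service only ever handles outputs (identifiers, or a daily key the user chose to release), so compromising the service does not expose the long-term tracing key.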

Our measures further reduce the risk of unnecessary information exposure while providing meaningful user controls.

References

[1] https://play.google.com/store/apps/details?id=nic.goi.aarogyasetu

[2] https://www.gov.sg/article/help-speed-up-contact-tracing-with-tracetogether

[3] http://news.mit.edu/2020/bluetooth-covid-19-contact-tracing-0409

[4] https://www.bbc.co.uk/news/technology-52263244

[6] https://github.com/DP-3T/documents

[7] Naveed, Muhammad, et al. “Inside Job: Understanding and Mitigating the Threat of External Device Mis-Binding on Android.” NDSS, 2014.

[8] Demetriou, Soteris, et al. “What’s in your dongle and bank account? Mandatory and discretionary protection of Android external resources.” NDSS, 2015.

[9] Demetriou, Soteris, et al. “HanGuard: SDN-driven protection of smart home WiFi devices from malicious mobile apps.” Proceedings of the 10th ACM Conference on Security and Privacy in Wireless and Mobile Networks. 2017.

[10] Zhou, Xiaoyong, et al. “Identity, location, disease and more: Inferring your secrets from android public resources.” Proceedings of the 2013 ACM SIGSAC conference on Computer & communications security. 2013.

[11] Lee, Yeonjoon, et al. “Ghost Installer in the Shadow: Security Analysis of App Installation on Android.” 2017 47th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN). IEEE, 2017.

[12] Tuncay, Güliz Seray, et al. “Resolving the predicament of android custom permissions.” NDSS, 2018.

[14] https://www.cvedetails.com/vulnerability-list/vendor_id-13806/Swiftkey.html

[15] https://computer.howstuffworks.com/internet/basics/google4.htm

[16] https://en.wikipedia.org/wiki/FBI%E2%80%93Apple_encryption_dispute

[18] Felt, Adrienne Porter, et al. “Android permissions demystified.” Proceedings of the 18th ACM conference on Computer and communications security. 2011.

[19] Felt, Adrienne Porter, et al. “Android permissions: User attention, comprehension, and behavior.” Proceedings of the eighth symposium on usable privacy and security. 2012.
